Vacuum suction is the force that arises when there is a difference in pressure between the inside and the outside of a system. This force has applications in a wide range of fields such as manufacturing, medicine, and science. Measuring vacuum suction is a critical aspect of many of these applications, as it allows the force to be controlled and optimized for a particular use case. This article discusses the main methods used to measure vacuum suction and the factors that affect the accuracy of these measurements.

Vacuum Measurement Units

Vacuum suction is typically expressed in units of pressure rather than force. Vacuum levels are usually quoted as absolute pressure, so a lower number means a stronger vacuum. The most common unit is the pascal (Pa), the SI unit of pressure, defined as a force of one newton acting on one square meter of surface area. Another common unit is the torr, defined as 1/760 of a standard atmosphere, or approximately 133.3 Pa.
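As a rough illustration of these units, the sketch below converts between torr and pascal and estimates the force that a pressure difference exerts on a surface (force = pressure difference × area). The function names and the suction-cup numbers are illustrative assumptions, not values from any particular instrument.

```python
# One torr is defined as 1/760 of a standard atmosphere (101325 Pa).
PA_PER_TORR = 101325.0 / 760.0


def torr_to_pa(pressure_torr: float) -> float:
    """Convert a pressure from torr to pascal."""
    return pressure_torr * PA_PER_TORR


def pa_to_torr(pressure_pa: float) -> float:
    """Convert a pressure from pascal to torr."""
    return pressure_pa / PA_PER_TORR


def suction_force_n(delta_p_pa: float, area_m2: float) -> float:
    """Force in newtons produced by a pressure difference acting on an area."""
    return delta_p_pa * area_m2


# Illustrative example: a 50 kPa pressure difference across a
# suction cup with an effective area of 20 cm^2 (0.002 m^2).
print(f"1 torr is about {torr_to_pa(1.0):.1f} Pa")
print(f"Holding force: about {suction_force_n(50_000.0, 0.002):.0f} N")
```

The force scales linearly with both the pressure difference and the area, which is why a pressure reading alone is usually enough to characterize suction for a fixed geometry.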

Measurement Techniques

There are several techniques used to measure vacuum suction, each with its advantages and disadvantages. The choice of measurement technique depends on several factors, such as the level of vacuum required, the accuracy required, and the nature of the system being measured.

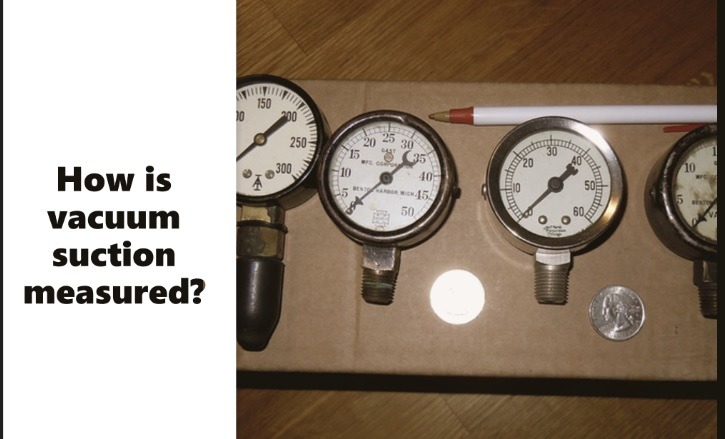

1. Mechanical Gauges

Mechanical gauges are the most basic type of vacuum gauge and rely on mechanical movements to measure the level of vacuum. These gauges are typically based on the principle of a spring or a diaphragm being deformed by the pressure difference between the inside and outside of the system. The deformation of the spring or diaphragm is measured and calibrated to give a reading of the vacuum level.

The main advantages of mechanical gauges are their low cost and ease of use. However, they have limited accuracy and are susceptible to drift over time. Mechanical gauges are typically used in applications where a rough indication of the vacuum level is sufficient.
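The calibration step for a mechanical gauge can be sketched as a two-point linear fit that maps deflection to pressure. This assumes the spring or diaphragm responds linearly over the range of interest, which real gauges only approximate. The function name and the calibration numbers are hypothetical.

```python
from typing import Callable


def make_linear_calibration(
    reading_lo: float, pressure_lo: float,
    reading_hi: float, pressure_hi: float,
) -> Callable[[float], float]:
    """Return a function mapping a raw deflection reading to pressure.

    Assumes a linear response between the two calibration points.
    """
    if reading_hi == reading_lo:
        raise ValueError("calibration readings must differ")
    slope = (pressure_hi - pressure_lo) / (reading_hi - reading_lo)

    def to_pressure(reading: float) -> float:
        return pressure_lo + slope * (reading - reading_lo)

    return to_pressure


# Hypothetical calibration: 0.0 mm deflection at 0 kPa of pressure
# difference, 2.0 mm deflection at 100 kPa.
deflection_to_kpa = make_linear_calibration(0.0, 0.0, 2.0, 100.0)
print(deflection_to_kpa(1.0))  # pressure difference in kPa at 1.0 mm
```

Repeating the two-point calibration periodically is one simple way to compensate for the drift mentioned above.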

2. Thermal Conductivity Gauges

Thermal conductivity gauges measure the level of vacuum by measuring the thermal conductivity of the gas in the system. The gauge consists of two elements: a heated filament and a temperature sensor. When the gas in the system is at a high pressure, it conducts heat away from the filament, causing the temperature sensor to detect a drop in temperature. As the pressure in the system decreases, less heat is conducted away from the filament, and the temperature sensor detects an increase in temperature. The change in temperature is used to calculate the level of vacuum.

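The main advantages of thermal conductivity gauges are their robustness, fast response, and good repeatability in the rough and medium vacuum range. Their reading depends on the gas being measured, so they are usually calibrated for nitrogen or air. They are commonly used to monitor vacuum levels in applications such as semiconductor manufacturing, where control of the vacuum level is critical.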
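Because the response of a thermal conductivity gauge is nonlinear, the final step from sensor signal to pressure is often a lookup in a calibration table. The sketch below interpolates in log-pressure between calibration points. The table values are made up purely for illustration, and the assumption that the signal rises with pressure depends on how the gauge electronics are built.

```python
import bisect
import math

# HYPOTHETICAL calibration points: (sensor signal in volts, pressure in Pa).
# Real values come from the gauge manufacturer or a calibration run.
CAL_POINTS = [(1.0, 0.1), (2.0, 1.0), (4.0, 10.0), (7.0, 100.0), (9.0, 1000.0)]


def signal_to_pressure_pa(signal_v: float, cal=CAL_POINTS) -> float:
    """Interpolate pressure from a sensor signal, linearly in log10(pressure)."""
    signals = [s for s, _ in cal]
    log_pressures = [math.log10(p) for _, p in cal]
    if not signals[0] <= signal_v <= signals[-1]:
        raise ValueError("signal outside calibrated range")
    # Index of the upper calibration point bracketing the signal.
    i = min(max(bisect.bisect_right(signals, signal_v), 1), len(signals) - 1)
    s0, s1 = signals[i - 1], signals[i]
    frac = (signal_v - s0) / (s1 - s0)
    log_p = log_pressures[i - 1] + frac * (log_pressures[i] - log_pressures[i - 1])
    return 10.0 ** log_p


print(signal_to_pressure_pa(3.0))  # pressure in Pa for a 3.0 V signal
```

Interpolating in log-pressure rather than pressure keeps the relative error roughly even across several decades, which suits gauges that span a wide range.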

3. Ionization Gauges

Ionization gauges use the ionization of gas molecules to measure the level of vacuum. In the common hot-cathode design, the gauge consists of a heated cathode (the filament), a positively biased grid that acts as the anode, and a negatively biased ion collector. Electrons emitted from the cathode are accelerated towards the grid and collide with gas molecules along the way, ionizing them. The resulting positive ions are attracted to the collector, creating a current that is proportional to the gas density, and therefore to the pressure, in the system.

The main advantage of ionization gauges is their high sensitivity at low pressures, where mechanical and thermal conductivity gauges no longer respond. They are commonly used in ultra-high vacuum applications such as particle accelerators and space simulation chambers.
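For a hot-cathode ionization gauge, the collected ion current is usually converted to pressure with the relation P = I_ion / (S × I_emission), where S is the gauge sensitivity. The sketch below applies that relation. The default sensitivity of 10 per torr is only a typical order of magnitude for nitrogen and is an assumption here; the real value comes from the gauge datasheet.

```python
def ion_gauge_pressure_torr(
    ion_current_a: float,
    emission_current_a: float,
    sensitivity_per_torr: float = 10.0,  # ASSUMED; check the gauge datasheet
) -> float:
    """Pressure in torr from the ion and emission currents (both in amperes)."""
    if emission_current_a <= 0 or sensitivity_per_torr <= 0:
        raise ValueError("emission current and sensitivity must be positive")
    return ion_current_a / (sensitivity_per_torr * emission_current_a)


# Illustrative numbers: 1 nA of ion current at 1 mA of emission current.
pressure = ion_gauge_pressure_torr(ion_current_a=1e-9, emission_current_a=1e-3)
print(f"{pressure:.1e} torr")
```

Because the ion current is divided by the emission current, the reading stays stable when the filament emission drifts, as long as both currents are measured.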

Factors Affecting Accuracy

Several factors can affect the accuracy of vacuum suction measurements. One of the most critical factors is the type of gauge used. As discussed above, different types of gauges have different levels of accuracy and sensitivity. The choice of gauge should be based on the level of accuracy required for the application.
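The idea of matching the gauge to the required vacuum level can be sketched as a simple range lookup. The thresholds below are rough, assumed boundaries chosen for illustration; actual operating ranges vary between gauge models and overlap considerably.

```python
def suggest_gauge(pressure_pa: float) -> str:
    """Suggest a gauge family for an expected absolute pressure in pascal.

    The thresholds are rough assumptions, not manufacturer specifications.
    """
    if pressure_pa <= 0:
        raise ValueError("absolute pressure must be positive")
    if pressure_pa >= 100.0:   # rough vacuum up to atmospheric pressure
        return "mechanical"
    if pressure_pa >= 0.1:     # medium vacuum
        return "thermal conductivity"
    return "ionization"        # high and ultra-high vacuum


for expected_pa in (50_000.0, 1.0, 1e-6):
    print(expected_pa, "->", suggest_gauge(expected_pa))
```

Systems that pump down through several decades of pressure often combine two gauge types and switch between them near the boundary.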

Another factor that can affect the accuracy of vacuum suction measurements is the temperature of the system being measured. The temperature of the system can affect the viscosity and thermal conductivity of the gas, which can, in turn, affect the accuracy of the gauge. To minimize the impact of temperature on the accuracy of vacuum suction measurements, gauges are often designed to operate at a constant temperature.

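The composition of the gas in the system can also affect the accuracy of vacuum suction measurements. Thermal conductivity and ionization gauges respond to properties of the gas itself, so the same pressure produces a different reading for different gases, and most gauges are calibrated for nitrogen or air. For example, helium is harder to ionize than nitrogen, so an ionization gauge calibrated for nitrogen reads well below the true pressure when the system contains mostly helium. To minimize the impact of gas composition on vacuum suction measurements, it is essential to use gauges that are calibrated for the specific gas mixture in the system, or to apply the manufacturer's gas correction factors.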
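When a gauge is calibrated for one gas but used with another, the reading can be corrected with a gas-specific factor. The sketch below does this for an ionization gauge calibrated for nitrogen. The relative sensitivities are approximate, commonly quoted values and should be treated as assumptions; the gauge manual is the authoritative source.

```python
# Approximate sensitivities relative to nitrogen for ionization gauges.
# ASSUMED illustrative values; consult the gauge manual for real ones.
RELATIVE_SENSITIVITY = {"N2": 1.00, "He": 0.18, "H2": 0.46, "Ar": 1.29}


def correct_for_gas(indicated_pressure: float, gas: str) -> float:
    """Convert a nitrogen-equivalent reading to the true pressure of `gas`.

    The result is in the same unit as the indicated pressure.
    """
    try:
        factor = RELATIVE_SENSITIVITY[gas]
    except KeyError:
        raise ValueError(f"no correction factor for gas {gas!r}") from None
    return indicated_pressure / factor


# A reading of 1.8e-7 torr in a helium-filled system.
print(f"{correct_for_gas(1.8e-7, 'He'):.1e} torr")
```

For gas mixtures a single factor is not enough, which is one reason calibration against the actual process gas is preferred when accuracy matters.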

Another factor that can affect the accuracy of vacuum suction measurements is the presence of magnetic fields. Magnetic fields can interfere with the operation of certain types of gauges, such as ionization gauges, because they deflect the electrons and ions on which the measurement depends. To minimize the impact of magnetic fields on the accuracy of vacuum suction measurements, gauges are often shielded from external magnetic fields or mounted away from strong magnets.

Conclusion

Measuring vacuum suction is critical for many applications in manufacturing, medicine, and science. By understanding the available measurement techniques and the factors that affect accuracy, it is possible to choose the appropriate gauge and optimize vacuum suction for a particular use case.